ACM Human Factors in Computing Systems (CHI), 2020

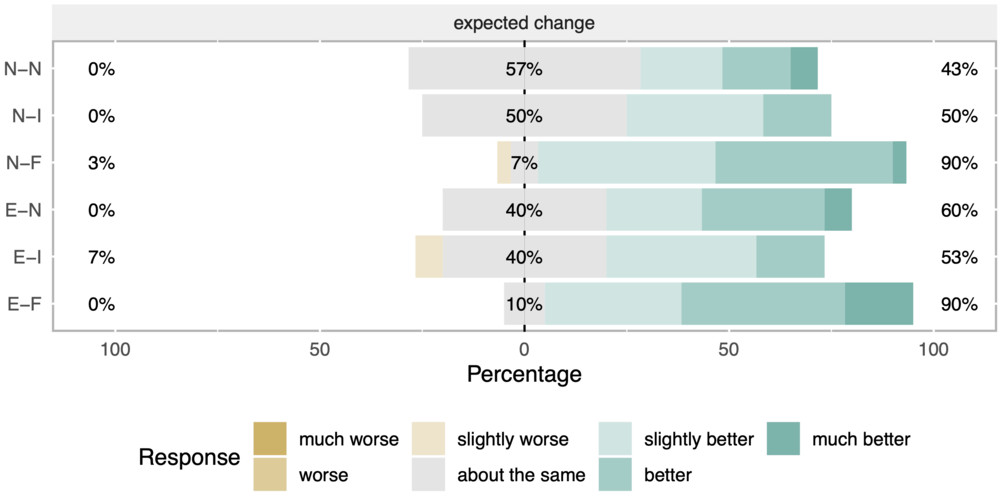

Participants' ratings of their expected model changes show: (1) In general, participants expected improvements (green bars), but more so in the feature-level feedback conditions (E[xplanation]-F[eature-level feedback] and N[o explanation]-F[eature-level feedback]). (2) More surprisingly, a large portion of participants who did not provide any feedback to the model (conditions *-N[o feedback]) thought the model would somehow correct itself.

Abstract

Automatically generated explanations of how machine learning (ML) models reason can help users understand and accept them. However, explanations can have unintended consequences: promoting over-reliance or undermining trust. This paper investigates how explanations shape users' perceptions of ML models with or without the ability to provide feedback to them: (1) does revealing model flaws increase users' desire to "fix" them; and (2) does providing explanations cause users to believe--wrongly--that models are introspective, and will thus improve over time? Through two controlled experiments--varying model quality--we show how the combination of explanations and user feedback impacted perceptions, such as frustration and expectations of model improvement. Explanations without opportunity for feedback were frustrating with a lower-quality model, while interactions between explanation and feedback for the higher-quality model suggest that detailed feedback should not be requested without explanation. Users expected model correction, regardless of whether they provided feedback or received explanations.